Episode released on May 2, 2024

Episode recorded on February 7, 2024

Chaopeng Shen is a Professor at Penn State University and leads the Multiscale Hydrology, Processes, and Intelligence (MHPI) Group. His group focuses on data-driven and process-based models to support decision-making from catchment to global scales. Applications include rainfall-runoff, routing, and ecosystem and water quality modeling.

Highlights | Transcript

- Artificial Intelligence (AI) is a general term referring to the use of computers/machines to imitate human-like behaviors (Sun and Scanlon, 2019).

- Machine learning (ML) is a branch of AI that aims to train machines to learn and act like humans and to improve their learning through data fusion and real-world interactions.

- Deep Learning (DL) refers to a newer generation of ML algorithms for extracting and learning hierarchical representations of input data.

- Definition of Big Data (National Institute of Standards and Technology), the 5 Vs: high volume, variety (excludes repeated data with little information), velocity (how rapidly you can train the neural network), veracity (consistency, accuracy, quality, and trustworthiness), and variability (data inconsistencies).

- Unstructured data: emails, text, images, audio, video, etc. represent an important data source that has not traditionally been used much in science.

- Examples of AI/ML: self-driving vehicles, smartphones, ChatGPT

- AI/ML will become commonplace and continue to expand its user base; like our cell phones, it will come to seem nothing special.

- Differences between AI/ML and traditional statistics: AI/ML can handle much more complex interrelationships with multiple dependencies, and people can apply AI/ML without a fundamental understanding of statistics. Some AI/ML algorithms, such as cluster analysis, resemble statistical methods.

- AI/ML has been advancing beyond the black box notion to include explainable AI and differentiable AI that is more interpretable and transparent.

- Examples of applications of AI/ML:

- Image recognition: the original deep learning application. Convolutional neural networks are built to read an image or identify certain pixels (segmentation). Applications include remote sensing (e.g., land use change, like open graph (OG) image recognition).

- Time series analysis: the LSTM (long short-term memory) algorithm learns a response function from input data (e.g., weather inputs translated to soil moisture or streamflow).

- Example dataset for training: CAMELS (Catchment Attributes and Meteorology for Large-sample Studies), ~600 basins across the United States (Addor et al., 2017).

- ALICE (Automated Learning and Intelligence for Causation and Economics): a Microsoft project that directs AI toward economic decision making.

- Example application: predicting water quality. Inputs are weather data (precipitation, temperature, radiation, etc.) and watershed characteristics (slope, soil texture, etc.); the output is water quality, predicted with an LSTM. Train on hundreds of watersheds to avoid the overfitting that comes from limiting training to a few watersheds. Regularization is used with various watershed parameters to reduce model errors.

- Multi-objective predictions: use the same model to predict multiple parameters.

- Supervised machine learning: learning a mapping relationship between inputs and outputs (x and y) using deep neural networks (DNNs), transformers, etc. Boosted regression trees repeatedly fit many decision trees to reduce model uncertainty. Example: rainfall-runoff modeling, with rainfall as input and runoff as output, trained with rainfall and runoff data.

- Unsupervised machine learning: learn the patterns in the data and how the data are organized, with no input and output and no differentiation between x and y. An example is principal component analysis, which finds the directions of change that explain the variance in the data. Example: combine weather and runoff data and treat them as a single dataset.

- Weakly supervised machine learning: e.g., ChatGPT, BERT (Bidirectional Encoder Representations from Transformers), which fill in gaps using the joint distribution of the data. Example: look at soil moisture data and try to predict weather by filling in the gap. This is an ill-posed problem with multiple possible realizations.

- Speech recognition AI, converts speech to text.

- ChatGPT: helps students with writer’s block

- Hydrologists traditionally used process-based modeling (PBM): input, theoretical constraints (e.g., conserve mass), calibration, parameterization, output. Disadvantages: the theory may be incorrect, our assumptions may introduce bias, and a large number of parameters introduces non-uniqueness (aka equifinality; Beven, 2006). Advantages: we know the theory and can apply it to places with little data; PBMs can explain input-output relations and are interpretable; they can extrapolate based on theory.

- AI/ML: can deal with massive amounts of data efficiently and could have a trillion different weights rather than the few parameters in PBMs. AI/ML may learn the wrong relationship, returning good results for the wrong reason.

- Hybrid of AI and process-based modeling: perhaps analogous to the range of vehicles from EVs to hybrids to ICE (internal combustion engine) vehicles. One option is to use AI to parameterize process-based models.

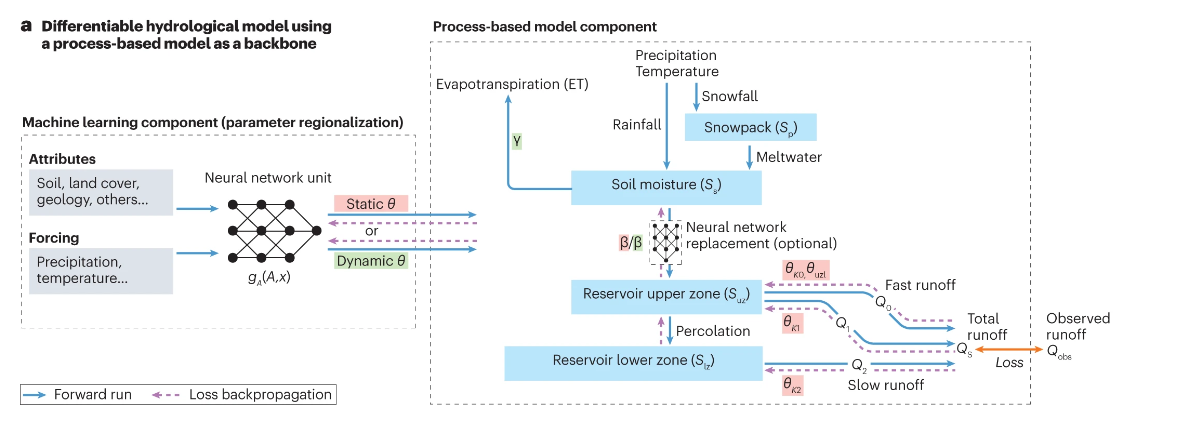

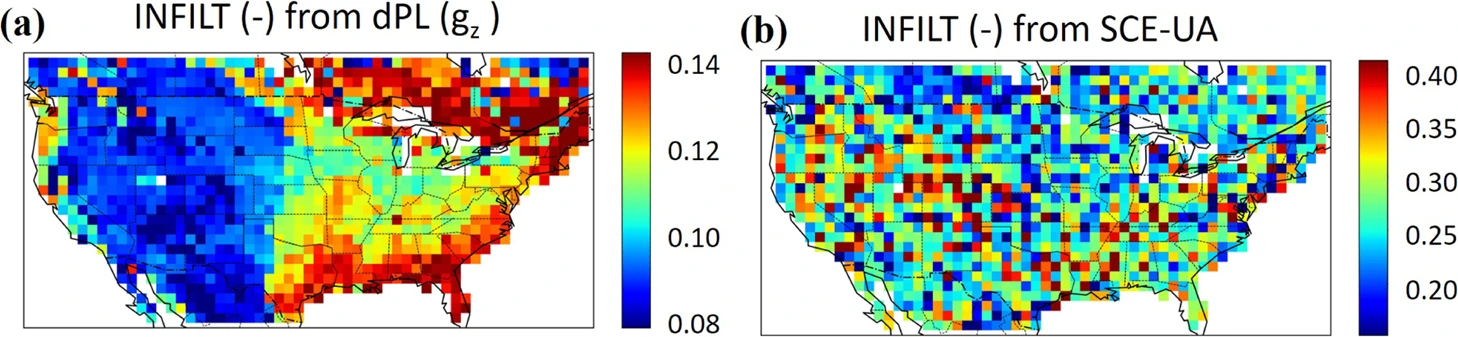
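The distinction between supervised and unsupervised learning in the highlights above can be illustrated with a small sketch. It is only an illustration under stated assumptions: the data are synthetic (runoff assumed to be about half of rainfall plus noise), the function names are hypothetical, and a straight-line fit stands in for the deep neural networks discussed in the episode. The supervised step learns a mapping from rainfall (x) to runoff (y); the unsupervised step treats rainfall and runoff as a single dataset and finds the first principal component.

```python
import math
import random


def make_synthetic_data(n=200, seed=0):
    """Hypothetical data: runoff is roughly half of rainfall plus noise."""
    rng = random.Random(seed)
    rain = [rng.uniform(0.0, 20.0) for _ in range(n)]
    runoff = [0.5 * r + rng.gauss(0.0, 0.5) for r in rain]
    return rain, runoff


def fit_supervised(x, y):
    """Supervised: least-squares line y ~ slope * x + intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def first_principal_component(x, y):
    """Unsupervised: leading eigenvector of the 2x2 covariance matrix.

    No variable is treated as input or output; both are just columns.
    """
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x) / n
    syy = sum((b - my) ** 2 for b in y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    # Largest eigenvalue of [[sxx, sxy], [sxy, syy]] in closed form.
    trace, det = sxx + syy, sxx * syy - sxy ** 2
    lam = trace / 2 + math.sqrt(trace ** 2 / 4 - det)
    # Matching eigenvector is (sxy, lam - sxx); normalize to unit length.
    vx, vy = sxy, lam - sxx
    norm = math.hypot(vx, vy)
    return (vx / norm, vy / norm), lam / trace  # direction, variance share


if __name__ == "__main__":
    rain, runoff = make_synthetic_data()
    print("supervised slope, intercept:", fit_supervised(rain, runoff))
    print("first component, explained:", first_principal_component(rain, runoff))
```

The supervised fit answers "given rainfall, what runoff do I expect?", while the principal component only describes how the two variables vary together.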

- Differentiable programming: connecting prior physical knowledge to neural networks, expanding physics-informed AI (Shen et al., 2023). Training uses gradient-based optimization with backpropagation. Make PBMs differentiable and combine them with neural networks. Use this for parameterization or for modeling a missing process. Can use the automatic differentiation provided by AI programming frameworks.

- Apply this hybrid approach in the next-generation National Water Model.

- Hybrid approach: combines the advantages of both, processing large amounts of data rapidly while retaining process clarity and applicability in data-sparse regions.

- Workforce development: need to train the next generation, starting with high school and undergraduate students. Need diversity.
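The differentiable programming idea in the highlights above (make a process-based model differentiable so its parameters can be learned by gradient descent) can be sketched with a toy example. Everything here is an assumption for illustration, not the MHPI group's code: the process model is a hypothetical linear-reservoir "bucket" with one parameter `k`, the rainfall series is made up, and the derivative is written out by hand with the chain rule where an automatic differentiation framework would normally do that work. In a hybrid model, a neural network would typically supply `k` from watershed attributes; here a single trainable number `theta` plays that role.

```python
import math


def simulate(k, rain, s0=0.0):
    """Linear-reservoir bucket model that also tracks dQ/dk at each step."""
    s, ds_dk = s0, 0.0
    flows, grads = [], []
    for p in rain:
        s = s + p                 # rainfall enters storage (mass is conserved)
        q = k * s                 # outflow is proportional to storage
        dq_dk = s + k * ds_dk     # chain rule through q = k * s
        s = s - q                 # outflow leaves storage
        ds_dk = ds_dk - dq_dk     # storage sensitivity carried to next step
        flows.append(q)
        grads.append(dq_dk)
    return flows, grads


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def calibrate(rain, observed, theta=0.0, lr=0.1, steps=500):
    """Gradient descent on mean squared error; k = sigmoid(theta) stays in (0, 1)."""
    n = len(rain)
    for _ in range(steps):
        k = sigmoid(theta)
        flows, grads = simulate(k, rain)
        dloss_dk = sum(2.0 * (q - o) * g
                       for q, o, g in zip(flows, observed, grads)) / n
        theta -= lr * dloss_dk * k * (1.0 - k)   # dk/dtheta = k * (1 - k)
    return sigmoid(theta)


if __name__ == "__main__":
    # Hypothetical rainfall pulses; "observations" come from a known k = 0.3.
    rain = [10.0, 0.0, 0.0, 5.0, 0.0, 0.0, 0.0, 8.0, 0.0, 0.0] * 3
    observed, _ = simulate(0.3, rain)
    print("recovered k:", calibrate(rain, observed))
```

Because the gradient passes through the process equations themselves, the mass balance is kept while the parameter is learned from data.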

Tutorials: https://mhpi.github.io/codes/ (Starting point: Quick LSTM tutorial on soil moisture prediction & updated, simplified LSTM tutorial for CAMELS streamflow)

Similarities and differences between purely data-driven neural networks and process-based models: please see this link for discussion.

https://bit.ly/3TTSVC7