Listen here on Spotify | Listen here on Apple Podcast

Episode released on February 12, 2026

Episode recorded on January 12, 2026

Alex Sun discusses the evolution of AI/ML over the past decades and resources for hydrology.

Alex Sun is a Data Scientist at the U.S. Department of Energy's National Energy Technology Laboratory (NETL). He previously worked as a Senior Research Scientist at the University of Texas at Austin, where he focused on applying AI/ML to hydrologic problems using remote sensing, modeling, and monitoring data at global to regional scales.

Highlights

General Applications and Terminology

- Artificial intelligence and machine learning are pervasive in our work and lives, as exemplified by self-driving vehicles that combine sensors (LiDAR, cameras, GPS), deep learning, and real-time decision-making to replicate human driving behavior (Tesla, Waymo).

- Terminology:

  - Artificial intelligence: a generic term for the use of computers to imitate human-like behavior.

  - Machine learning: a subset of AI in which models are trained on data to learn patterns and reproduce aspects of human reasoning to solve real-world problems.

- AI/ML evolved markedly over the past decades:

  - 1990s: statistical and ML era, learning from data: Bayesian networks, support vector machines, decision trees and tree ensembles (e.g., random forests)

  - Early 2000s: neural networks and deep learning

  - 2010s on: deep learning breakthroughs, e.g., convolutional neural networks (CNNs)

  - Mid-2010s: word embeddings; long short-term memory (LSTM) renaissance in hydrology (LSTM was originally introduced by Hochreiter and Schmidhuber, Neural Computation, 1997)

  - 2017–2020: transformer models, introduced in "Attention Is All You Need" (Vaswani et al., 2017); attention-based transformers became the main backbone of language, vision, and multimodal models

  - 2020s: multimodal AI (text and image); ChatGPT (Chat Generative Pre-trained Transformer) and conversational AI

  - 2024 onwards: agentic, multimodal, and infrastructure AI: agents (plan, use tools, act), multimodal models (text, image, social media), and domain-specific foundation models

- Deep learning advanced substantially in the early 2010s, e.g., through convolutional neural networks (CNNs) (Goodfellow et al., Deep Learning, MIT Press, 2016).

  - CNNs enabled automated extraction of features from images and other complex datasets (LeCun et al., Deep learning, Nature, 2015).

  - Hierarchical model layers learn compact representations of high-dimensional data, reducing the need for manual predictor selection.
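To make the feature-extraction idea concrete, here is a minimal sketch of the operation a single convolutional layer applies: a small kernel slides over an image, and a ReLU keeps the positive responses. The kernel is a hand-picked vertical-edge detector chosen for illustration (an assumption, not a learned filter); in a real CNN the kernel weights are learned from data, and stacking many such layers produces the hierarchical representations described above. The function names are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def conv2d_valid(image: Grid, kernel: Grid) -> Grid:
    """'Valid' 2D cross-correlation, the operation deep-learning libraries call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map: Grid) -> Grid:
    """Keep positive responses and zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 4 x 5 image: dark on the left, bright on the right (a vertical edge).
image = [[0, 0, 0, 1, 1] for _ in range(4)]
# Hand-picked (not learned) vertical-edge kernel.
kernel = [[-1, 0, 1] for _ in range(3)]

# Nonzero responses mark the windows that straddle the edge.
feature_map = relu(conv2d_valid(image, kernel))
print(feature_map)
```

The same sliding-window computation is applied with many learned kernels per layer, which is what lets CNNs replace hand-crafted predictors.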

- Foundation models power today’s generative AI

Large-capacity models trained on massive datasets give rise to the "emergent behavior" of foundation models.

- Classic machine learning models are still highly relevant

Methods such as random forests and support vector machines are still widely used, particularly when data or computing resources are limited.

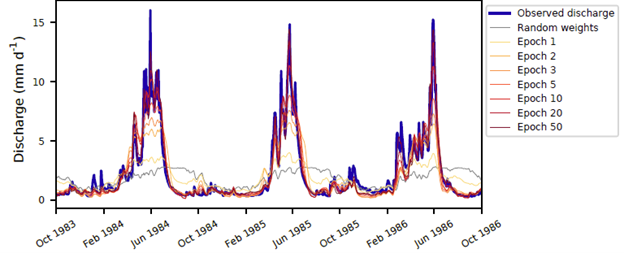

- LSTM models are very valuable for hydrology (Fig. 1)

Long short-term memory networks mirror hydrologic storage and memory processes, making them well-suited for rainfall–runoff modeling (Kratzert et al., LSTM, HESS, 2022).
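The storage analogy can be sketched side by side: a linear-reservoir "bucket" carries water from one time step to the next, and an LSTM cell carries its cell state `c` forward, with the forget gate deciding how much memory to retain and the input gate deciding how much new forcing to admit. This is a single-unit, standard-library sketch with hand-picked, untrained weights; the `weights` values, the recession constant `k`, and the rainfall series are all hypothetical, and it is not a trained rainfall–runoff model.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def linear_reservoir(precip, k=0.1):
    """Bucket model: storage S gains rainfall P, then drains Q = k * S each step."""
    storage, flows = 0.0, []
    for p in precip:
        storage += p          # recharge
        q = k * storage       # outflow proportional to storage
        storage -= q          # remaining storage carried to the next step
        flows.append(q)
    return flows


def lstm_unit(precip, w):
    """Single-unit LSTM driven by rainfall; the cell state c acts like storage."""
    h, c, outputs = 0.0, 0.0, []
    for p in precip:
        f = sigmoid(w["wf"] * p + w["uf"] * h + w["bf"])    # forget gate: fraction of memory kept
        i = sigmoid(w["wi"] * p + w["ui"] * h + w["bi"])    # input gate: fraction of new signal admitted
        g = math.tanh(w["wg"] * p + w["ug"] * h + w["bg"])  # candidate update from current forcing
        o = sigmoid(w["wo"] * p + w["uo"] * h + w["bo"])    # output gate: how much memory is exposed
        c = f * c + i * g        # memory update, analogous to retained storage plus recharge
        h = o * math.tanh(c)     # output signal, analogous to runoff
        outputs.append(h)
    return outputs


# Hypothetical, untrained weights; recurrent terms (u*) switched off for readability.
weights = {
    "wf": 0.0, "uf": 0.0, "bf": 2.0,
    "wi": 4.0, "ui": 0.0, "bi": -2.0,
    "wg": 1.0, "ug": 0.0, "bg": 0.0,
    "wo": 0.0, "uo": 0.0, "bo": 0.0,
}
rain = [1.0, 1.0, 0.0, 0.0, 0.0]  # two wet steps followed by three dry steps

bucket_flow = linear_reservoir(rain)
lstm_flow = lstm_unit(rain, weights)
# In both, the signal persists and recedes after rain stops: a "memory" of past inputs.
print([round(q, 3) for q in bucket_flow])
print([round(q, 3) for q in lstm_flow])
```

In a trained LSTM the gates are functions of the inputs learned from data, so the effective "recession" can vary with conditions instead of being fixed like `k`.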

- AI development is tightly coupled to hardware advances.

- Latent representations enable generalization across regions

High-quality representation learning requires large-volume, cross-domain training data. Deep learning can map heterogeneous systems (e.g., river basins) into a shared low-dimensional space for transferable modeling.

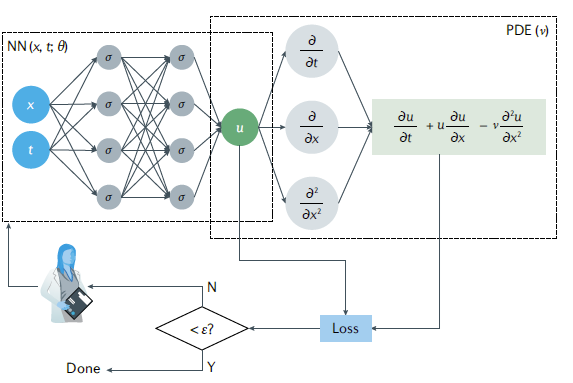

- Physics-informed ML improves interpretability and realism (Fig. 2).

Embedding physical constraints (e.g., conservation laws) into data-driven models helps ensure physically meaningful results (Karniadakis et al., Physics-informed machine learning, Nature Reviews Physics, 2021).

- Ensemble modeling improves the sampling of extreme events and uncertainty estimation

Using multiple models trained on the same data often yields more robust predictions than relying on a single "best" algorithm.
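The physics-informed and ensemble ideas can each be sketched in a few lines. The first function adds a soft physics constraint to an ordinary data-fitting loss: besides matching observed runoff, predictions are penalized when they violate the water balance P - ET - Q - ΔS = 0. The second summarizes an ensemble by its mean, its spread, and the fraction of members exceeding a flood threshold, a simple way to express uncertainty and extreme-event likelihood. All function names, the weight `lam`, the threshold, and the data are hypothetical; in a physics-informed neural network the penalty would be minimized with automatic differentiation during training, whereas this sketch only evaluates the loss.

```python
import statistics


def mse(pred, obs):
    return sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs)


def physics_informed_loss(pred_runoff, obs_runoff, precip, et, d_storage, lam=1.0):
    """Data misfit plus a penalty on water-balance residuals P - ET - Q - dS."""
    data_term = mse(pred_runoff, obs_runoff)
    residuals = [p - e - q - ds for p, e, q, ds in zip(precip, et, pred_runoff, d_storage)]
    physics_term = sum(r ** 2 for r in residuals) / len(residuals)
    return data_term + lam * physics_term


def ensemble_summary(members, threshold):
    """Per time step: ensemble mean, spread (population std. dev.), and exceedance fraction."""
    summary = []
    for values in zip(*members):  # values = all members' forecasts at one time step
        summary.append((
            statistics.fmean(values),
            statistics.pstdev(values),
            sum(v > threshold for v in values) / len(values),
        ))
    return summary


# Toy water-balance example (units assumed to be mm per step); the balance closes for obs_q.
precip, et, d_storage = [5.0, 3.0], [1.0, 1.0], [1.0, 0.0]
obs_q = [3.0, 2.0]
print(physics_informed_loss(obs_q, obs_q, precip, et, d_storage))       # fits data, conserves mass
print(physics_informed_loss([4.0, 2.0], obs_q, precip, et, d_storage))  # misfit and imbalance both add

# Three hypothetical ensemble members forecasting streamflow at two time steps.
members = [[10.0, 20.0], [12.0, 30.0], [14.0, 40.0]]
print(ensemble_summary(members, threshold=25.0))
```

The exceedance fraction is where ensembles help with extremes: a single "best" model gives one value per step, while the ensemble gives an estimate of how likely the threshold is to be crossed.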

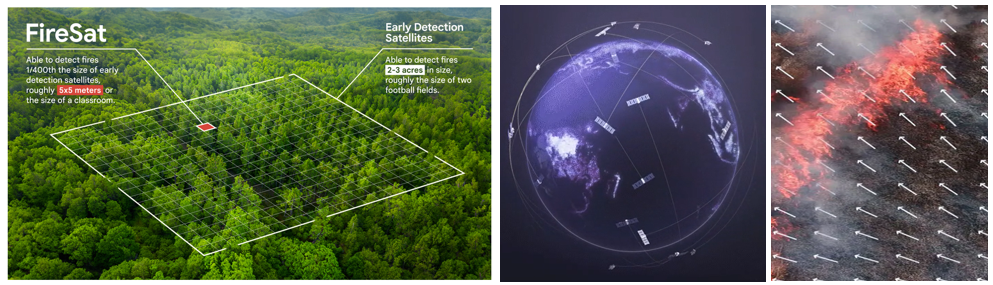

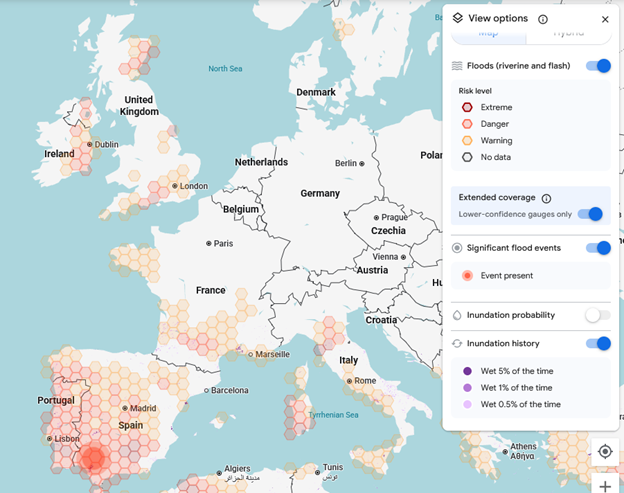

- AI enhances real-time hazard management by fusing multi-sensor, multimodal data.

Flood forecasting and evacuation planning increasingly rely on AI to integrate sensor data, remote sensing, and social media feeds (e.g., Google Crisis Response) (Figs. 3 and 4).

- Remote sensing provides big data in Earth science, e.g., from Landsat, MODIS, and Sentinel.

- AI/ML helps fuse remote sensing products with different spatial and temporal resolutions, e.g., Landsat (high spatial, low temporal resolution) with MODIS (high temporal, low spatial resolution). NASA's Harmonized Landsat and Sentinel-2 (HLS) product similarly combines Landsat and Sentinel-2 observations into a single, more frequent record (Fig. 5).
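The simplest form of this fusion idea can be sketched as a change-based baseline: assume the temporal change seen by the coarse, frequent sensor applies uniformly within each coarse pixel, and add it to the last available fine-resolution image. This is a deliberately simplified, non-ML stand-in, in the spirit of the temporal-change assumption behind classic methods such as STARFM but without their spatial weighting; learned fusion models replace the uniform-change assumption with relationships fitted to data. The function names, grid sizes, resolution `factor`, and reflectance values are hypothetical.

```python
def upsample_nearest(coarse, factor):
    """Repeat each coarse pixel factor x factor times to align it with the fine grid."""
    fine = []
    for row in coarse:
        expanded = [v for v in row for _ in range(factor)]
        fine.extend(list(expanded) for _ in range(factor))
    return fine


def fuse_change(fine_t1, coarse_t1, coarse_t2, factor):
    """Predict the fine image at t2 as fine_t1 plus the coarse-scale change from t1 to t2."""
    c1 = upsample_nearest(coarse_t1, factor)
    c2 = upsample_nearest(coarse_t2, factor)
    return [
        [f + (b - a) for f, a, b in zip(f_row, a_row, b_row)]
        for f_row, a_row, b_row in zip(fine_t1, c1, c2)
    ]


# Hypothetical reflectances: one 2 x 2 block of fine pixels inside one coarse pixel.
fine_t1 = [[0.20, 0.30], [0.40, 0.50]]  # e.g., a Landsat-like scene at t1
coarse_t1 = [[0.35]]                     # e.g., a MODIS-like pixel at t1
coarse_t2 = [[0.45]]                     # same coarse pixel at t2 (no fine image available)
predicted = fuse_change(fine_t1, coarse_t1, coarse_t2, factor=2)
print([[round(v, 2) for v in row] for row in predicted])
```

The fine spatial detail comes from the t1 image and the timing of change comes from the coarse sensor, which is the trade-off the fusion step tries to exploit.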

- Global foundation models enable applications

Models such as Google DeepMind's AlphaEarth fuse Landsat 8/9, Sentinel-1 (SAR), and Sentinel-2 (optical) imagery into annual (from 2017), high-resolution (10 m), global representations of land that can be applied to environmental analysis.

- AI resource use is a growing concern

Training and operating large models require substantial electricity and water, raising sustainability questions for data centers. Roughly 10 to 50 queries to a medium-sized large language model may use about half a liter of water and perhaps 50 watt-hours of electricity.

- Domain expertise is critical for the reliable application of AI/ML, including validating AI outputs, interpreting results, and providing ethical oversight of AI-generated content and even decisions.
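As a rough check on the water and electricity figures quoted under AI resource use, dividing them by the quoted range of 10 to 50 queries gives a per-query footprint. The sketch only restates the episode's approximate numbers; it is not an independent measurement, and the function name is hypothetical.

```python
def per_query_footprint(total_water_l, total_energy_wh, n_queries):
    """Return (water in mL, energy in Wh) per query for a batch of n_queries."""
    return total_water_l * 1000 / n_queries, total_energy_wh / n_queries


# Approximate figures from the episode: about 0.5 L of water and 50 Wh for 10 to 50 queries.
for n in (10, 50):
    water_ml, energy_wh = per_query_footprint(0.5, 50.0, n)
    print(f"{n} queries -> {water_ml:.0f} mL water and {energy_wh:.0f} Wh per query")
```

Under these assumptions, each query corresponds to roughly 10 to 50 mL of water and 1 to 5 Wh of electricity.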